Jitendra Singh Jat

Jitendra Singh Jat - Online marketing latest and upcoming information.

Tuesday, March 11, 2014

Thursday, October 03, 2013

How to Build Links Using Expired Domains

How to Find Dropped/Expired/Expiring Domains?

How to Vet Expired Domains

- Check to see what domains 301 redirect to them. I use Link Research Tools for this as you can run a backlink report on the domain in question and see the redirects. If you find a domain that has 50 spammy 301s pointing to it, it may be more trouble than it's worth. Preventing a 301 from coming through when you don't control the site that redirects is almost impossible. You can block this on the server level but that won't help you with your site receiving bad link karma from Google. In that case, you may have to disavow those domains.

- Check their backlinks using your link tool of choice. Is the profile full of nothing but spam that will take ages to clean up or will you have to spend time disavowing the links? If so, do you really want to bother with it? If you want to buy the domain to use for a 301 redirect and it's full of spammy links, at least wait until you've cleared that all up before you 301 it.

- Check to see if they were ever anything questionable using the Wayback Machine. If the site simply wasn't well done 2 years ago, that's not nearly as big of a problem as if you're going to be using the site for educating people about the dangers of lead and it used to be a site that sold Viagra.

- Check to see if the brand has a bad reputation. Do some digging upfront so you can save time disassociating yourself from something bad later. You know how sometimes you get a resume from a person and you ask an employee if they know this Susan who also used to work at the same place that your current employee worked years ago and your employee says "oh yes I remember her. She tried to burn the building down once"? Well, Susan might try to burn your building down, too.

- Check to see if they were part of a link network. See what other sites were owned by the same person and check them out too.

- Check to see if they have an existing audience. Is there an attached forum with active members, are there people generally commenting on posts and socializing them, etc.?

How Should You Use Expired Domains?

Some Good Signs of Expired Domains

- Authority links that will pass through some link benefits via a 301 redirect (if I'm going that route.)

- An existing audience of people who regularly contribute, comment, and socialize the site's content (if I'm going to use it as a standalone site.) If I'm looking to buy a forum, for example, I'd want to make sure that there are contributing members with something to offer already there. If I want a site that I will be maintaining and adding to and plan to build it out further, seeing that there's an audience of people reading the content, commenting on it, and socializing it would make me very happy.

- A decent (and legitimate) Toolbar PageRank (TBPR) that is in line with where I think it should be. If I see a site that is 7 months old and has a TBPR of 6, I'll obviously be suspicious, and if I found one that was 9 years old and was a TBPR 1, I would hesitate before using it, for example. I also have to admit that while I don't rely on TBPR as a defining metric of quality, I'd be crazy to pretend that it means nothing so it's definitely something I look at.

- A domain age of at least 2 years if I was going to do anything other than hold it and try to resell it.

- Internal pages that have TBPR. If there are 5000 pages and only the homepage has any TBPR, I'd be a bit suspicious about why no internal pages had anything.

A Few Red Flags of Expired Domains

- Suspicious TBPR as mentioned above.

- The domain isn't indexed in Google. Even if you look at a recently expired site and see it has a TBPR of 4 with good Majestic flow metrics, is 5 years old, and has been updated in some way until it expired (whether through new blog posts, comments, social shares, etc.), it's safe to assume it's not indexed for a good reason and you probably want to stay away from it.

- Backlink profile is full of nothing but spam.

- All comments on the site's posts are spammy ones and trackbacks.
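The good signs and red flags above can be turned into a rough checklist. The sketch below is only an illustration: the `DomainFacts` fields, the function name, and every threshold are assumptions chosen to mirror the examples in the text (a 7-month-old site with a TBPR of 6, a 9-year-old site with a TBPR of 1), and the inputs are expected to be gathered by hand from whatever tools you already use.

```python
from dataclasses import dataclass


@dataclass
class DomainFacts:
    """Facts collected manually from your own tools (all fields hypothetical)."""
    age_years: float
    homepage_tbpr: int
    internal_pages_with_tbpr: int
    indexed_in_google: bool
    spam_backlink_share: float  # 0.0 to 1.0, estimated from a backlink report


def red_flags(d: DomainFacts) -> list:
    """Return the checklist red flags that apply to this domain."""
    flags = []
    if not d.indexed_in_google:
        flags.append("not indexed in Google")
    # Illustrative thresholds only: TBPR far out of line with the domain's age.
    if d.age_years < 1 and d.homepage_tbpr >= 6:
        flags.append("TBPR suspiciously high for age")
    if d.age_years >= 9 and d.homepage_tbpr <= 1:
        flags.append("TBPR suspiciously low for age")
    # Only the homepage has TBPR.
    if d.homepage_tbpr > 0 and d.internal_pages_with_tbpr == 0:
        flags.append("no internal pages have TBPR")
    if d.spam_backlink_share >= 0.9:
        flags.append("backlink profile is mostly spam")
    if d.age_years < 2:
        flags.append("younger than 2 years")
    return flags


if __name__ == "__main__":
    candidate = DomainFacts(0.6, 6, 0, True, 0.1)
    for flag in red_flags(candidate):
        print("RED FLAG:", flag)
```

A list of flags, rather than a single score, keeps the final call with a person, which matches how the checks are described above.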

Bottom Line: Is Using Expired Domains a Good Idea?

After '(Not Provided)' & Hummingbird, Where is Google Taking Us Next?

Search Today

1. '(Not Provided)'

The recent extension to "(not provided)" for 100 percent of organic Google keywords in Google Analytics got a lot of people up in arms. It was called "sudden", even though it ramped up over a period of two years. I guess "it suddenly dawned on me" would be more accurate.

2. Hummingbird

Now let's look at the other half of that double-tap: Hummingbird. Since Google's announcement of the new search algorithm, there have been a lot of statements that fall on the inaccurate end of the scale. One common theme seems to be referring to it as the biggest algo update since Caffeine.

Why Might they be Related?

Where is Search Heading Next?

Here's where I think the Knowledge Graph plays a major role. I've said many times that I thought Google+ was never intended to be a social media platform; it was intended to be an information harvester. I think that the data harvested was intended to help build out the Knowledge Graph, but that it goes still deeper.

Tuesday, June 25, 2013

How to Make Your Keywords Fit Your Marketing Messaging

Who Are You?

- If your company was a car, how would you describe it?

- How would you describe your company culture?

- What are your company's core values?

- What's your company's mission? The vision?

- Who do you want to buy from your company?

- Who are the decision makers? Decision influencers?

Market Research

- How do you find a company for XXXX?

- What would you type into Google to find these companies?

- If you were looking for advice on XXXX, what would you do?

- What's the most important thing you look for on a XXXX company's home page?

Informational vs. Commercial Searches

- Informational: Your user is still researching and just wants more information about a topic.

- Commercial: Your user is looking for a business and ready to buy.
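As a first pass at sorting a keyword list into these two buckets, a simple word-matching sketch can help. The hint words and the function name below are assumptions picked for illustration, not an established taxonomy, and anything the rules miss comes back as "unknown" for manual review.

```python
# Hypothetical hint words; tune these for your own market and language.
COMMERCIAL_HINTS = {"buy", "price", "pricing", "quote", "hire", "cheap", "deal"}
INFORMATIONAL_HINTS = {"how", "what", "why", "guide", "tips", "tutorial"}


def classify_intent(query: str) -> str:
    """Label a search query as commercial, informational, or unknown."""
    words = set(query.lower().split())
    # Commercial hints are checked first, so a query containing both kinds
    # of hint word is treated as commercial.
    if words & COMMERCIAL_HINTS:
        return "commercial"
    if words & INFORMATIONAL_HINTS:
        return "informational"
    return "unknown"


if __name__ == "__main__":
    for q in ["how to fix a leaky faucet", "buy kitchen faucet", "faucet"]:
        print(q, "->", classify_intent(q))
```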

Lastly Go To Google

- Target audience

- Services or products

- Price points

- Messaging and positioning

6 Reasons Why Your Google Adwords For Small Businesses Is Not Working?

Thursday, May 23, 2013

Tuesday, December 27, 2011

Thursday, January 20, 2011

Too Good

God: Only one of you can come in....

1st: I am a Brahmin, I have served you all my life. I have a right to heaven....

2nd: I am a doctor, I have served people all my life. I have a right to heaven....

3rd: I have worked in IT.... ......

God: Enough... don't say another word.... Are you trying to make me cry, you fool? Come on in......... Your forwarded mails, follow-ups, 2 years on the bench, night shifts, run-ins with the PM, deductions bigger than your CTC, the pick-up and drop hassle, client meetings, delivery dates, working weekends, hair falling out and turning grey at a young age, the weight problem, etc. etc…. you've made me all sentimental, my friend…..come in, come in quickly…..

Monday, November 08, 2010

Footer Link Optimization for Search Engines and User Experience

Site after site that I visit lately has been showing a tendency for using footer links to run their internal SEO link structure and anchor text optimization. While this practice in years past held value, today I rarely ever recommend it (and yes, SEOmoz itself will be moving away from using our footer for category links soon). Here's why:

- Footer links may be devalued by search engines automatically

Check out the evidence - Yahoo! says they may devalue footer links, Bill Slawski uncovers patents suggesting the same and anecdotal evidence suggests Google might do this (or go further) as well. Needless to say, if you want to make sure your links are passing maximum value, it's wise to avoid the footer (particularly the footer class itself).

- Footer links are often not the first link on the page to a URL

Since we know that the first link on a page is the one whose anchor text counts and footer links, while anchor text optimized, are often a second link to an already-linked-to target, they are likely not to have the desired impact.

- Footer links get very low CTR

Naturally, since they're some of the least visible links on a webpage, they receive very little traffic. Thus, if algorithms like BrowseRank or other traffic metrics start to play a role in search rankings, footers are unlikely to have a positive impact.

- Footer links often take a page beyond a healthy link total

Many pages that already have 80-100 links on the page are going to lose out when they add a footer with another 30-50 links embedded. The link juice passed per link will go down and the value of each individual link is lowered.

- Footer links can be a time suck

The time you spend crafting the perfect link structure in the footers could be put towards more optimal link structures throughout the site's navigation and cross-linking from content, serving both users and engines better.
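To put rough numbers on the point about healthy link totals, here is a deliberately simplified model in which a page's value is split evenly across every link on it. Real engines are not known to work this simply; the even split and the function name are assumptions used only to show the direction of the effect.

```python
def value_per_link(page_value: float, link_count: int) -> float:
    """Even-split toy model: each link gets an equal share of the page's value."""
    if link_count <= 0:
        raise ValueError("link_count must be positive")
    return page_value / link_count


if __name__ == "__main__":
    before = value_per_link(1.0, 100)       # page with 100 links
    after = value_per_link(1.0, 100 + 50)   # same page plus a 50-link footer
    print(f"per-link share before footer: {before:.4f}")
    print(f"per-link share after footer:  {after:.4f}")
    print(f"drop per link: {(1 - after / before):.0%}")
```

Under this toy model, adding a 50-link footer to a 100-link page cuts each link's share by about a third.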

That's not to say I don't suggest doing a good job with your footers. Many sites, large and small, will continue to use the footer as a resource for link placement and, just as with all other SEO tactics that fade, they do carry some residual value. Let's walk through a few examples of both good and bad to get a sense for what works:

Thumbs Up: Shopper.Cnet.com

I like the organization, the clear layout, the visibility and the fact that they've distinguished (through straight HTML links vs. drop downs) which links deserve to pass link juice and ranking value. I'm also impressed that I actually see a "Paris Hilton" link in the footer yet am not completely unaccepting of the idea that it could be there entirely naturally, simply as a result of what's popular on CBS.

Thumbs Down: Hawaii-Aloha.com

These are my least favorite kinds of footers. The links are just squashed together, the focus is obviously on anchor text, not relevance, the links are hard to see and read and there's little thought given to users. The links don't even look necessarily clickable until you hover.

Thumbs Up: VIPRealtyInfo.com

When I searched for "Dallas Condos", I was sure I'd find some examples for thumbs down, which is why I was so thrilled to find VIPRealtyInfo, a clearly competitive site in a tough SEO market doing a lot of things right. Yeah, there's some reasonable optimization in the anchor text, but it's definitely not overboard and the links are useful to people and search engines. The visual layout and design quality give it an extra boost, too - something that can't be overstated in importance when it comes to potential manual reviews by the engines.

Thumbs Down: ABoardCertifiedPlasticSurgeonResource.com

The site's done a great job with design - it's really quite an attractive layout and color scheme. The links in the "most popular regions" aren't that bad; it's really the number of them that makes me worried. If they'd stuck to one column, I think they'd easily pass a manual review and pass good link juice (rather than spreading it out with so many links in addition to everything else on the page). The part that really sent me over the edge though was the two sentences in the green box, laden with links I didn't even realize were there until I hovered. Technically, there's nothing violating the search guidelines, but I wouldn't put it past the engines to come up with smart ways to devalue links like these, particularly when their focus is so clearly on anchor text, not user value.

Thumbs Up: Food.Yahoo.com

Again - great organization, good crosslinking (remaining relevant, then branching out to other network properties) and solid design. Even the most aggressive of the links on the right hand side appear natural and valuable to users, making it hard for an engine to argue they shouldn't pass full value.

Thumbs Sideways: DeviantArt.com

It's huge - seriously big. And while it's valuable for users and even contains some interesting content, it's not really accomplishing the job of a footer - it's more like a giant permanent content block on the site. The arrow that lets you close it is a good feature, and the design is solid, too. However, the link value really isn't there and the potential for big blocks of duplicate content across the site makes me a bit nervous, too.

So what can we take away from these analyses? A few general footer-for-SEO rules of thumb:

- Don't overstuff keywords in anchor text

- Make the links relevant and useful

- Organize links intelligently - don't just throw them into a big list

- Cross-linking is OK, just do it naturally (and in a way that a manual review could believe it's not solely for SEO purposes)

- Be smart about nofollows - nearly every footer on the web has a few links that don't need to be followed, so think about whether your terms of service and legal pages really require the link juice you're sending

- Make your footers look good and function well for users to avoid being labeled "manipulative" during a quality rater's review
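A few of these rules of thumb can be spot-checked with a short script. The sketch below uses Python's standard `html.parser` and assumes the footer is marked up with an HTML5 `<footer>` element (older sites often use a `div` with a footer class instead, which this would miss). The class name, the function name, and the thresholds of 30 links and 4 words of anchor text are all illustrative assumptions.

```python
from html.parser import HTMLParser


class FooterLinkAudit(HTMLParser):
    """Collect (anchor text, rel value) pairs for links inside <footer>."""

    def __init__(self):
        super().__init__()
        self.footer_depth = 0
        self.current_rel = None   # rel of the <a> being read, None if outside one
        self.text_parts = []
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "footer":
            self.footer_depth += 1
        elif tag == "a" and self.footer_depth > 0:
            self.current_rel = dict(attrs).get("rel") or ""
            self.text_parts = []

    def handle_data(self, data):
        if self.current_rel is not None:
            self.text_parts.append(data)

    def handle_endtag(self, tag):
        if tag == "footer" and self.footer_depth > 0:
            self.footer_depth -= 1
        elif tag == "a" and self.current_rel is not None:
            text = " ".join("".join(self.text_parts).split())
            self.links.append((text, self.current_rel))
            self.current_rel = None


def audit_footer(html: str, max_links: int = 30, max_words: int = 4) -> dict:
    """Summarize footer links against illustrative thresholds."""
    parser = FooterLinkAudit()
    parser.feed(html)
    parser.close()
    return {
        "link_count": len(parser.links),
        "too_many_links": len(parser.links) > max_links,
        "long_anchors": [t for t, _ in parser.links if len(t.split()) > max_words],
        "followed": [t for t, rel in parser.links if "nofollow" not in rel.split()],
    }
```

The `followed` list is a prompt for the nofollow question above: each entry is a footer link that currently passes value, so it is worth asking whether it needs to.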

Best Practices for Content Optimization

Is it possible that in all the years we've been writing at SEOmoz, there's never been a solid walkthrough on the basics of content optimization? Let's fix that up.

First off, by content, I don't mean keyword usage or keyword optimization. I'm talking about how the presentation and architecture of the text, image and multimedia content on a page can be optimized for search engines. The peculiar part is that many of these recommendations are second-order effects. Having the right formatting or display won't necessarily boost your rankings directly, but through it, you're more likely to earn links, get clicks and eventually benefit in search rankings. If you regularly practice the techniques below, you'll not only earn better consideration from the engines, but from the human activities on the web that influence their algorithms.

Content Structure

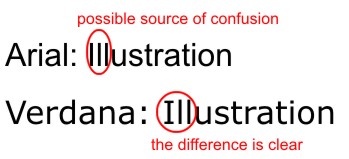

Because SEO has become such a holistic part of website improvement, it's no surprise that content formatting - the presentation, style and layout choices you select for your content - is a part of the process. Sans serif fonts like Arial and Helvetica are wise choices for the web; Verdana in particular has received high praise from usability/readability experts, such as in this article from WebAIM:

Verdana is one of the most popular of the fonts designed for on-screen viewing. It has a simple, straightforward design, and the characters or glyphs are not easily confused. For example, the upper-case "I" and the lower-case "L" have unique shapes, unlike Arial, in which the two glyphs may be easily confused.

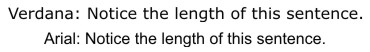

Another advantage of Verdana is the spacing between letters. One consideration to take into account with Verdana is that it is a relatively large font. The words take up more space than words in Arial, even at the same point size.

The larger size improves readability, but also has the potential of disrupting carefully-planned page layouts.

Font choice is accompanied in importance by sizing & contrast issues. Type smaller than 10pt is typically very challenging to parse and, in all cases, relative font sizes are recommended so users can employ browser options to increase/decrease the size if necessary. Contrast (the color difference between the background and text) is also critical: legibility usually drops for anything that isn't black (or very dark) on a white background.
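One way to put a number on the contrast point is the contrast ratio defined in the W3C's WCAG 2.x accessibility guidelines. That formula is not something this post references; it is swapped in here as a widely used yardstick, and the function names are my own. The ratio runs from 1 (identical colors) to 21 (black on white), and WCAG's AA level asks for at least 4.5 for normal-sized text.

```python
def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb) -> float:
    """Relative luminance of an (R, G, B) color with 0-255 channels."""
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background) -> float:
    """WCAG contrast ratio between two colors; order does not matter."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


if __name__ == "__main__":
    white = (255, 255, 255)
    for name, color in [("black", (0, 0, 0)), ("light grey", (200, 200, 200))]:
        print(f"{name} on white: {contrast_ratio(color, white):.2f}")
```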

Content length is another critical piece of the optimization puzzle that's mistakenly placed in the "keyword density" or "unique content" buckets of SEO. In fact, content length can have a big role to play in whether your material is easy to consume and easy to share. Lengthy pieces often don't fare particularly well on the web, while short form and easily-digestible content often has more success. Sadly, splitting long pieces into multiple segments frequently backfires, as abandonment increases while link-attraction falls - the only benefit is page views per visit (which is why so many CPM-monetized sites employ this tactic).

Last but not least in content structure optimization is the display of the material. Beautiful, simple, easy-to-use and consumable layouts garner far more readership and links than poorly designed content wedged between ad blocks that threaten to overtake the page. I'd recommend checking out The Golden Ratio in Web Design from NetTuts, which has some great illustrations and advice on laying out web content on the page.

CSS & Semantic Markup

CSS is commonly mentioned as a "best practice" for general web design & development, but its principles coincide with many SEO guidelines as well. First, of course, is web page size. Google used to recommend keeping pages under 101K and, although most suspect that's no longer an issue, keeping file size low means faster load times, lower abandonment rates and a higher probability of being fully indexed, fully read and more frequently linked-to.

CSS can also help with another hotly debated issue: code to text ratio. Some SEOs swear that making code to text ratio smaller (so there's less code and more text) can help considerably on large websites with many thousands of pages. My personal experience showed this to be true (or, at least, appeared to be true) only once, but since good CSS makes it easy, there's no reason not to make it part of your standard operating procedure for webdev. Use tableless CSS stored in external files, keep JavaScript calls external, and follow in the path of CSS Zen.

Finally, CSS provides an easy means for "semantic" markup. For a primer, see Digital Web Magazine's article, Writing Semantic Markup. For SEO purposes, there are only a few primary tags that apply and the extent of microformats interpretation (using tags like

Content Uniqueness & Depth

The final portion of our content optimization discussion is the most important. Few can debate the value the engines place on robust, unique, value-adding content. Google in particular has had several rounds of kicking "low quality content" sites out of their indices, and the other engines have followed suit.

The first critical designation to avoid is "Thin Content" - an insider phrase that (loosely) means that which the engines do not feel contributes enough unique material to display a page competitively in the search results. The criteria have never been officially listed, but I have seen & heard many examples/discussions from engineers and would place the following on my list:

- 30-50 unique words, forming unique, parseable sentences that other sites/pages do not have

- Unique HTML text content, different from other pages on the site in more than just the replacement of key verbs & nouns (yes, this means all those sites that build the same page and just change the city and state names thinking it's "unique")

- Unique titles and meta description elements - if you can't write unique meta descriptions, just exclude them. I've seen similarity algos trip up pages and boot them from the index simply for having near-duplicate meta tags.

- Unique video/audio/image content - the engines have started getting smarter about identifying and indexing pages for vertical search that wouldn't normally meet the "uniqueness" criteria
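To get a feel for how the "same page, different city name" pattern looks to a similarity check, here is a minimal sketch using word shingles and Jaccard similarity. This is a common textbook technique, not a description of what any engine actually runs; the function names and the shingle size of 3 are assumptions.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word sequences of the given size."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa or not sb:
        return 0.0  # at least one text is too short to compare
    return len(sa & sb) / len(sa | sb)


if __name__ == "__main__":
    template = ("We offer the best plumbing services in {city} Texas "
                "call us today for a free quote")
    dallas = template.format(city="Dallas")
    austin = template.format(city="Austin")
    print(f"similarity: {jaccard(dallas, austin):.2f}")
```

Swapping one word in a 16-word sentence still leaves most shingles shared, which is why pages built this way tend to look like near-duplicates of each other.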

BTW - You can often bypass these limitations if you have a good quantity of high value, external links pointing to the page in question (though this is very rarely scalable) or an extremely powerful, authoritative site (note how many one sentence Wikipedia stub pages still rank).

The next criterion from the engines demands that websites "add value" to the content they publish, particularly if it comes from (wholly or partially) a secondary source. This most frequently applies to affiliate sites, whose re-publishing of product descriptions, images, etc. has come under search engine fire numerous times. In fact, we've recently dealt with this issue on several sites and concluded it's best to anticipate manual evaluations here even if you've dodged the algorithmic sweep. The basic tenets are:

- Don't simply re-publish something that's found elsewhere on the web unless your site adds substantive value to users

- If you're hosting affiliate content, expect to be judged more harshly than others, as affiliates in the SERPs are one of users' top complaints about search engines

- Small things like a few comments, a clever sorting algorithm or automated tags, filtering, a line or two of text, or advertising do NOT constitute "substantive value"

For some exemplary cases where websites fulfill these guidelines, check out the way sites like C|Net (example), UrbanSpoon (example) or Metacritic (example) take content/products/reviews from elsewhere, both aggregating AND "adding value" for their users.

Last, but not least, we have the odd (and somewhat unknown) content guideline from Google, in particular, to refrain from "search results in the search results" (see this post from Google's WebSpam Chief, including the comments, for more detail). Google's stated feeling is that search results generally don't "add value" for users, though others have made the argument that this is merely an anti-competitive move. Whatever the motivation, here at SEOmoz, we've cleaned up many sites' "search results," transforming them into "more valuable" listings and category/sub-category landing pages, and have had great success recovering rankings and gaining traffic from Google.

In essence, you want to avoid the potential for being perceived (not necessarily just by an engine's algorithm but by human engineers and quality raters) as search results. Refrain from:

- Pages labeled in the title or headline as "search results" or "results"

- Pages that appear to offer a query-based list of links to "relevant" pages on the site without other content (add a short paragraph of text, an image, and formatting that makes the "results" look like detailed descriptions/links instead)

- Pages whose URLs appear to carry search queries, e.g. ?q=seattle+restaurants vs. /seattle-restaurants
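The URL check in the last item is easy to automate across a crawl export. The sketch below uses Python's standard `urllib.parse`; the set of parameter names treated as "search-like" and both function names are assumptions, so adjust them to whatever your own site uses.

```python
import re
from urllib.parse import parse_qs, urlsplit

# Hypothetical list of query parameters that usually carry a search term.
SEARCH_PARAMS = {"q", "query", "search", "s", "keyword"}


def looks_like_search_url(url: str) -> bool:
    """True if the URL's query string contains a search-like parameter."""
    params = parse_qs(urlsplit(url).query, keep_blank_values=True)
    return any(name.lower() in SEARCH_PARAMS for name in params)


def suggested_path(url: str):
    """Suggest a static-looking path for a search URL, or None if not one."""
    params = parse_qs(urlsplit(url).query)
    for name, values in params.items():
        if name.lower() in SEARCH_PARAMS:
            words = re.findall(r"[a-z0-9]+", values[0].lower())
            return "/" + "-".join(words)
    return None


if __name__ == "__main__":
    for url in ["?q=seattle+restaurants", "/seattle-restaurants"]:
        print(url, looks_like_search_url(url), suggested_path(url))
```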