Is it possible that in all the years we've been writing at SEOmoz, there's never been a solid walkthrough on the basics of content optimization? Let's fix that up.

First off, by content, I don't mean keyword usage or keyword optimization. I'm talking about how the presentation and architecture of the text, image and multimedia content on a page can be optimized for search engines. The peculiar part is that many of these recommendations are second-order effects. Having the right formatting or display won't necessarily boost your rankings directly, but through it, you're more likely to earn links, get clicks and eventually benefit in search rankings. If you regularly practice the techniques below, you'll earn better consideration not only from the engines, but also from the human activity on the web that influences their algorithms.

Content Structure

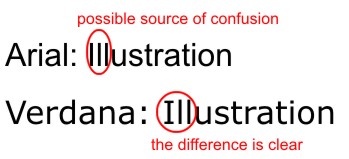

Because SEO has become such a holistic part of website improvement, it's no surprise that content formatting - the presentation, style and layout choices you select for your content - is a part of the process. Sans serif fonts like Arial and Helvetica are wise choices for the web; Verdana in particular has received high praise from usability/readability experts, as in this article from WebAIM:

Verdana is one of the most popular of the fonts designed for on-screen viewing. It has a simple, straightforward design, and the characters or glyphs are not easily confused. For example, the upper-case "I" and the lower-case "L" have unique shapes, unlike Arial, in which the two glyphs may be easily confused.

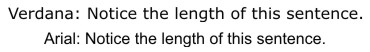

Another advantage of Verdana is the spacing between letters. One consideration to take into account with Verdana is that it is a relatively large font. The words take up more space than words in Arial, even at the same point size.

The larger size improves readability, but also has the potential of disrupting carefully-planned page layouts.

Font choice is matched in importance by sizing and contrast. Type smaller than 10pt is typically very challenging to read, and in all cases relative font sizes are recommended so users can employ browser options to increase or decrease the size if necessary. Contrast - the color difference between the background and text - is also critical; legibility usually drops for anything that isn't black (or very dark) on a white background.
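
If you want a number to go with the contrast advice, here is a minimal sketch. It assumes the contrast-ratio formula from the W3C's WCAG 2.x accessibility guidelines, which the discussion above doesn't reference; the function names are my own, and the 4.5:1 figure is WCAG's AA threshold for normal-size text, not anything the search engines have published.

```python
def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel (0-255) to linear light, per WCAG 2.x."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple) -> float:
    """Relative luminance of an (R, G, B) color: 0.0 for black, 1.0 for white."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: tuple, background: tuple) -> float:
    """WCAG contrast ratio between two colors; the argument order doesn't matter."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


if __name__ == "__main__":
    white = (255, 255, 255)
    for name, color in [("black", (0, 0, 0)), ("light gray", (153, 153, 153))]:
        ratio = contrast_ratio(color, white)
        verdict = "passes" if ratio >= 4.5 else "fails"
        print(f"{name} on white: {ratio:.2f}:1 ({verdict} the assumed 4.5:1 bar)")
```

Black on white gives the highest possible ratio under this formula, which lines up with the rule of thumb above; pale gray text on white lands well under the 4.5:1 bar.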

Content length is another critical piece of the optimization puzzle that's mistakenly placed in the "keyword density" or "unique content" buckets of SEO. In fact, content length can have a big role to play in whether your material is easy to consume and easy to share. Lengthy pieces often don't fare particularly well on the web, while short form and easily-digestible content often has more success. Sadly, splitting long pieces into multiple segments frequently backfires, as abandonment increases while link-attraction falls - the only benefit is page views per visit (which is why so many CPM-monetized sites employ this tactic).

Last but not least in content structure optimization is the display of the material. Beautiful, simple, easy-to-use and consumable layouts garner far more readership and links than poorly designed content wedged between ad blocks that threaten to overtake the page. I'd recommend checking out The Golden Ratio in Web Design from NetTuts, which has some great illustrations and advice on laying out web content on the page.

CSS & Semantic Markup

CSS is commonly mentioned as a "best practice" for general web design & development, but its principles coincide with many SEO guidelines as well. First, of course, is web page size. Google used to recommend keeping pages under 101K and, although most suspect that's no longer an issue, keeping file size low means faster load times, lower abandonment rates and a higher probability of being fully indexed, fully read and more frequently linked-to.

CSS can also help with another hotly debated issue: code to text ratio. Some SEOs swear that making code to text ratio smaller (so there's less code and more text) can help considerably on large websites with many thousands of pages. My personal experience showed this to be true (or, at least, appeared to be true) only once, but since good CSS makes it easy, there's no reason not to make it part of your standard operating procedure for webdev. Use tableless CSS stored in external files, keep JavaScript calls external, and follow the path of CSS Zen.
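
There's no official definition of code to text ratio, so treat the following as one rough way to measure it rather than the way any engine does. The sketch uses Python's standard `html.parser` module; the class and function names are hypothetical, and it reports the share of the raw HTML that is visible text, so a higher number means less code per word.

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_to_markup_ratio(html: str) -> float:
    """Fraction of the document's characters that are visible text (0.0 to 1.0)."""
    if not html:
        return 0.0
    parser = _VisibleText()
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace so indentation doesn't count as "text".
    text = " ".join(" ".join(parser.chunks).split())
    return len(text) / len(html)


if __name__ == "__main__":
    lean = "<p>Hello world</p>"
    bloated = (
        '<table><tr><td><font face="Arial"><b>Hello world</b></font></td></tr></table>'
        "<script>var x = 1;</script>"
    )
    print(f"lean:    {text_to_markup_ratio(lean):.2f}")
    print(f"bloated: {text_to_markup_ratio(bloated):.2f}")
```

Both sample pages say the same thing, but the table-and-font-tag version spends far more bytes saying it, which is exactly what moving presentation and scripts into external files cuts down.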

Finally, CSS provides an easy means for "semantic" markup. For a primer, see Digital Web Magazine's article, Writing Semantic Markup. For SEO purposes, there are only a few primary tags that apply and the extent of microformats interpretation (using tags like

Content Uniqueness & Depth

The final portion of our content optimization discussion is the most important. Few can debate the value the engines place on robust, unique, value-adding content. Google in particular has had several rounds of kicking "low quality content" sites out of their indices, and the other engines have followed suit.

The first critical designation to avoid is "Thin Content" - an insider phrase that (loosely) refers to pages the engines do not feel contribute enough unique material to be displayed competitively in the search results. The criteria have never been officially listed, but I have seen & heard many examples/discussions from engineers and would place the following on my list:

- 30-50 unique words, forming unique, parseable sentences that other sites/pages do not have

- Unique HTML text content, different from other pages on the site in more than just the replacement of key verbs & nouns (yes, this means all those sites that build the same page and just change the city and state names thinking it's "unique")

- Unique titles and meta description elements - if you can't write unique meta descriptions, just exclude them. I've seen similarity algos trip up pages and boot them from the index simply for having near-duplicate meta tags.

- Unique video/audio/image content - the engines have started getting smarter about identifying and indexing pages for vertical search that wouldn't normally meet the "uniqueness" criteria
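
To get a feel for how "same page, different city name" content can be spotted, here is a small sketch using word shingles and Jaccard similarity. This is a common textbook technique that I'm swapping in for illustration, not the engines' actual similarity algorithm (which isn't public); the function names, the shingle size of 3, and the sample pages are all assumptions of mine.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word n-grams ("shingles") in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Shared shingles divided by all shingles: 1.0 means identical wording.

    Returns 0.0 when both texts are empty, since there is nothing to compare.
    """
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


if __name__ == "__main__":
    seattle = ("Find the best plumbers in Seattle, Washington. "
               "Our Seattle plumbers are licensed and insured.")
    portland = ("Find the best plumbers in Portland, Oregon. "
                "Our Portland plumbers are licensed and insured.")
    original = ("Pike Place Market opened in 1907 and remains one of the "
                "oldest public markets in the country.")
    print("template swap:", round(jaccard_similarity(seattle, portland), 2))
    print("unrelated copy:", round(jaccard_similarity(seattle, original), 2))
```

Run across every pair of pages on a site (or every pair of meta descriptions), scores that cluster far above what genuinely different pages produce are a hint that the "unique" pages are really one template with a few nouns swapped.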

BTW - You can often bypass these limitations if you have a good quantity of high value, external links pointing to the page in question (though this is very rarely scalable) or an extremely powerful, authoritative site (note how many one sentence Wikipedia stub pages still rank).

The next criterion from the engines demands that websites "add value" to the content they publish, particularly if it comes (wholly or partially) from a secondary source. This most frequently applies to affiliate sites, whose re-publishing of product descriptions, images, etc. has come under search engine fire numerous times. In fact, we've recently dealt with this issue on several sites and concluded it's best to anticipate manual evaluations here even if you've dodged the algorithmic sweep. The basic tenets are:

- Don't simply re-publish something that's found elsewhere on the web unless your site adds substantive value to users

- If you're hosting affiliate content, expect to be judged more harshly than others, as affiliates in the SERPs are one of users' top complaints about search engines

- Small things like a few comments, a clever sorting algorithm or automated tags, filtering, a line or two of text, or advertising do NOT constitute "substantive value"

For some exemplary cases where websites fulfill these guidelines, check out the way sites like C|Net (example), UrbanSpoon (example) or Metacritic (example) take content/products/reviews from elsewhere, both aggregating AND "adding value" for their users.

Last, but not least, we have the odd (and somewhat unknown) content guideline from Google, in particular, to refrain from "search results in the search results" (see this post from Google's WebSpam Chief, including the comments, for more detail). Google's stated feeling is that search results generally don't "add value" for users, though others have made the argument that this is merely an anti-competitive move. Whatever the motivation, here at SEOmoz, we've cleaned up many sites' "search results," transforming them into "more valuable" listings and category/sub-category landing pages, and have had great success recovering rankings and gaining traffic from Google.

In essence, you want to avoid the potential for being perceived (not necessarily just by an engine's algorithm but by human engineers and quality raters) as search results. Refrain from:

- Pages labeled in the title or headline as "search results" or "results"

- Pages that appear to offer a query-based list of links to "relevant" pages on the site without other content (add a short paragraph of text, an image, and formatting that makes the "results" look like detailed descriptions/links instead)

- Pages whose URLs appear to carry search queries, e.g. ?q=seattle+restaurants vs. /seattle-restaurants
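
As a quick audit aid for the first and third items on that list, here is a hedged sketch that flags URLs and titles that look like search results. The parameter names in `SEARCH_PARAMS` and the function name are my own guesses at common patterns, not a list Google has published, so adjust them to match your own site's URLs.

```python
import re
from urllib.parse import parse_qs, urlparse

# Hypothetical list of query-string keys that commonly carry a search term.
SEARCH_PARAMS = {"q", "query", "search", "s", "keyword", "keywords"}


def search_result_signals(url: str, title: str = "") -> list:
    """Return the reasons a page might be perceived as a search results page."""
    reasons = []
    query = parse_qs(urlparse(url).query, keep_blank_values=True)
    hits = sorted(SEARCH_PARAMS & {key.lower() for key in query})
    if hits:
        reasons.append("URL carries search-style parameter(s): " + ", ".join(hits))
    if re.search(r"\bresults\b", title, re.IGNORECASE):
        reasons.append('title or headline mentions "results"')
    return reasons


if __name__ == "__main__":
    pages = [
        ("https://example.com/search?q=seattle+restaurants",
         "Search results for seattle restaurants"),
        ("https://example.com/seattle-restaurants", "Seattle Restaurants"),
    ]
    for url, title in pages:
        print(url, "->", search_result_signals(url, title) or "no signals")
```

An empty list doesn't mean a page is safe; the middle item (a bare list of links with no other content) takes a human eye to judge.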
